Grading checklist

Part 1: Homography estimation

- Describe your solution, including any interesting parameters or implementation choices for feature extraction, putative matching, RANSAC, etc.
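The sketch below shows one common way to implement the RANSAC step: fit a homography with the direct linear transform (DLT), then repeatedly sample 4 putative matches. It is a minimal illustration, not a required design. It assumes the putative matches are already available as two `(N, 2)` arrays `src` and `dst`. The function names, the iteration count, and the squared-distance threshold are hypothetical choices. A production version would also normalize coordinates (Hartley normalization) before the SVD.

```python
import numpy as np


def fit_homography(src, dst):
    """Direct linear transform (DLT): estimate H (3x3) so that dst ~ H @ [src, 1].

    src, dst: (N, 2) arrays of matching (x, y) coordinates, N >= 4.
    """
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        rows.append([x, y, 1, 0, 0, 0, -u * x, -u * y, -u])
        rows.append([0, 0, 0, x, y, 1, -v * x, -v * y, -v])
    A = np.asarray(rows, dtype=float)
    # h is the right singular vector of A with the smallest singular value.
    _, _, vt = np.linalg.svd(A)
    H = vt[-1].reshape(3, 3)
    return H / H[2, 2]


def apply_homography(H, pts):
    """Map (N, 2) points through H and convert back from homogeneous coordinates."""
    homog = np.column_stack([pts, np.ones(len(pts))])
    mapped = homog @ H.T
    return mapped[:, :2] / mapped[:, 2:3]


def ransac_homography(src, dst, n_iters=1000, thresh=4.0, seed=0):
    """Keep the 4-point model with the most inliers, then refit on all its inliers.

    thresh is a squared pixel distance (4.0 means within 2 px); the value is illustrative.
    """
    rng = np.random.default_rng(seed)
    best = np.zeros(len(src), dtype=bool)
    for _ in range(n_iters):
        sample = rng.choice(len(src), size=4, replace=False)
        H = fit_homography(src[sample], dst[sample])
        sq_resid = np.sum((apply_homography(H, src) - dst) ** 2, axis=1)
        inliers = sq_resid < thresh
        if inliers.sum() > best.sum():
            best = inliers
    # Least-squares refit on the consensus set of the best model.
    return fit_homography(src[best], dst[best]), best
```

Refitting on the full consensus set at the end usually gives a more accurate estimate than the best 4-point model alone.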

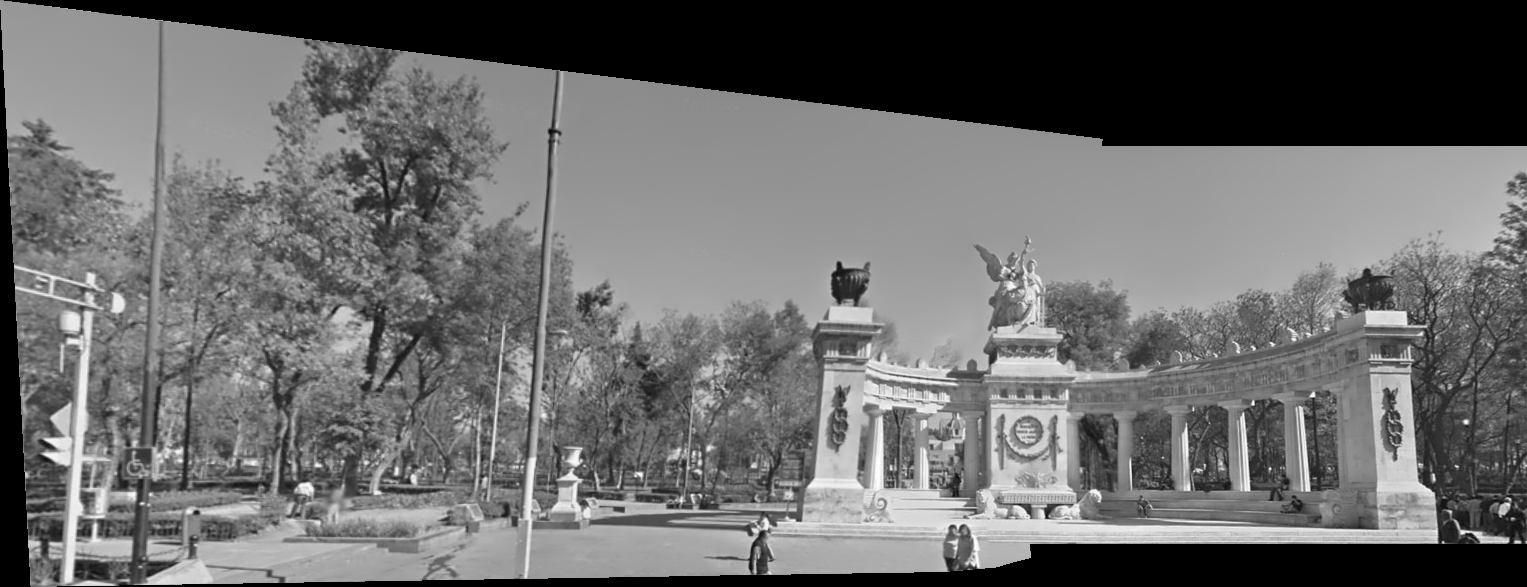

- For the image pair provided, report the number of homography inliers and the average residual for the inliers (squared distance between the point coordinates in one image and the transformed coordinates of the matching point in the other image). Also, display the locations of inlier matches in both images.
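The two requested numbers follow directly from the residual definition above. For inlier match $i$, the residual is $r_i = \lVert \mathbf{x}'_i - \pi(H\tilde{\mathbf{x}}_i) \rVert^2$, where $\tilde{\mathbf{x}}_i$ is the homogeneous point in the first image and $\pi$ divides by the third coordinate. The report value is the mean of $r_i$ over the inliers. The following is a small sketch of that computation. It assumes `H` maps image-1 coordinates to image-2 coordinates and that the inliers are given as a boolean mask; `inlier_stats` is a hypothetical helper name.

```python
import numpy as np


def inlier_stats(H, src, dst, inlier_mask):
    """Return (number of inliers, mean squared residual over the inliers).

    Residual of match i: squared distance between dst_i and src_i mapped by H.
    """
    src_in = src[inlier_mask]
    dst_in = dst[inlier_mask]
    homog = np.column_stack([src_in, np.ones(len(src_in))])
    mapped = homog @ H.T
    mapped = mapped[:, :2] / mapped[:, 2:3]  # back to Cartesian coordinates
    sq_resid = np.sum((mapped - dst_in) ** 2, axis=1)
    return int(inlier_mask.sum()), float(sq_resid.mean())


if __name__ == "__main__":
    # Toy example: H is a pure translation by (2, -1).
    H = np.array([[1.0, 0.0, 2.0], [0.0, 1.0, -1.0], [0.0, 0.0, 1.0]])
    src = np.array([[0.0, 0.0], [1.0, 1.0], [5.0, 5.0]])
    dst = np.array([[2.5, -1.0], [3.0, 0.0], [0.0, 0.0]])
    mask = np.array([True, True, False])
    print(inlier_stats(H, src, dst, mask))  # prints (2, 0.125)
```

Note that the residual here is a squared distance in pixels². If you prefer to also report an RMS value in pixels, take the square root of the mean, but label which one you report.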

- Display the final result of your stitching.

Part 2: Shape from shading

- Briefly describe your implemented solution, focusing especially on the more "non-trivial" or interesting parts of the solution. What implementation choices did you make, and how did they affect the quality of the result and the speed of computation? What are some artifacts and/or limitations of your implementation, and what are possible reasons for them?
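A frequent core step in this kind of solution is per-pixel least squares under a Lambertian model. The observed intensities satisfy $I = L\,\mathbf{g}$ with $\mathbf{g} = \rho\,\mathbf{n}$, so the albedo is $\rho = \lVert \mathbf{g} \rVert$ and the unit normal is $\mathbf{n} = \mathbf{g}/\rho$. The sketch below solves this for every pixel at once. It assumes the ambient image has already been subtracted, the light directions are known unit vectors, and there are no shadows. The function name and array layout are hypothetical, and this is not necessarily the formulation used in the slides.

```python
import numpy as np


def photometric_stereo(images, light_dirs):
    """Least-squares Lambertian photometric stereo (sketch).

    images: (H, W, N) stack of intensities, ambient image already subtracted.
    light_dirs: (N, 3) unit light directions.
    Returns albedo (H, W) and unit surface normals (H, W, 3).
    """
    h, w, n = images.shape
    I = images.reshape(-1, n).T  # (N, H*W): one column per pixel
    # Solve light_dirs @ g = I for g = albedo * normal at all pixels simultaneously.
    g, *_ = np.linalg.lstsq(light_dirs, I, rcond=None)  # (3, H*W)
    albedo = np.linalg.norm(g, axis=0)
    normals = g / np.maximum(albedo, 1e-8)  # guard against zero-albedo pixels
    return albedo.reshape(h, w), normals.T.reshape(h, w, 3)
```

Solving all pixels with a single `lstsq` call is much faster than looping over pixels. The surface gradients for height integration are then $p = n_x/n_z$ and $q = n_y/n_z$, up to the sign convention of your image axes.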

- Discuss the differences between the integration methods you implemented for #5 above. Specifically, choose one subject, display the outputs for all of a-d (be sure to choose viewpoints that make the differences especially visible), and discuss which method produces the best results and why. You should also compare the running times of the different approaches. For the remaining subjects (see below), it is sufficient to show only the output of your best method, and it is not necessary to give running times.
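The methods a-d from #5 are not reproduced in this template, so the following is only an illustration under an assumption. It assumes the methods include path-based integration of the gradient fields $p = \partial z/\partial x$ and $q = \partial z/\partial y$, for example integrating along the top row and then down the columns, the reverse order, and their average. The sketch shows those three variants and a simple wall-clock timing helper built on `time.perf_counter`. All function names are hypothetical. A random-path method could be timed the same way.

```python
import time

import numpy as np


def integrate_top_then_down(p, q):
    """Integrate p along row 0, then accumulate q down every column; z[0, 0] = 0."""
    z = np.zeros_like(p, dtype=float)
    z[0, 1:] = np.cumsum(p[0, 1:])
    z[1:, :] = z[0, :] + np.cumsum(q[1:, :], axis=0)
    return z


def integrate_left_then_across(p, q):
    """Integrate q along column 0, then accumulate p across every row; z[0, 0] = 0."""
    z = np.zeros_like(p, dtype=float)
    z[1:, 0] = np.cumsum(q[1:, 0])
    z[:, 1:] = z[:, :1] + np.cumsum(p[:, 1:], axis=1)
    return z


def integrate_average(p, q):
    """Average of the two path orders, which partially cancels path-dependent error."""
    return 0.5 * (integrate_top_then_down(p, q) + integrate_left_then_across(p, q))


def time_method(fn, p, q, repeats=10):
    """Mean wall-clock seconds per call of fn(p, q)."""
    start = time.perf_counter()
    for _ in range(repeats):
        fn(p, q)
    return (time.perf_counter() - start) / repeats


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    p, q = rng.normal(size=(2, 200, 200))  # arbitrary gradient fields for timing only
    for fn in (integrate_top_then_down, integrate_left_then_across, integrate_average):
        print(f"{fn.__name__}: {time_method(fn, p, q) * 1e3:.3f} ms")
```

On noisy, non-integrable gradients (as from real face images), each single path accumulates error along its route. This is why the path orders produce visibly different surfaces and why averaging over more paths tends to look smoother at a higher cost.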

- For every subject, display your estimated albedo maps and screenshots of height maps (use display_output and plot_surface_normals). When inserting result images into your report, you should resize/compress them appropriately to keep the file size manageable, but make sure that the correctness and quality of your output can be clearly and easily judged. For the 3D screenshots, be sure to choose a viewpoint that makes the structure as clear as possible (and/or feel free to include screenshots from multiple viewpoints). You will not receive credit for any results you have obtained but failed to include directly in the report PDF file.

- Discuss how the Yale Face data violate the assumptions of the shape-from-shading method covered in the slides. What features of the data can contribute to errors in the results? Feel free to include specific input images to illustrate your points. Choose one subject and attempt to select a subset of all viewpoints that better match the assumptions of the method. Show your results for that subset and discuss whether you were able to get any improvement over a reconstruction computed from all the viewpoints.
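One simple, hypothetical way to choose such a subset is to keep only images lit from near the camera axis. Strongly oblique lights cast shadows and create dark regions that violate the shadow-free Lambertian assumption. The sketch below assumes the light directions are available as 3D vectors in a frame where the camera looks along $+z$; check this convention against the provided loader. The 45° cutoff and the helper name are illustrative assumptions, not recommended values.

```python
import numpy as np


def select_frontal_lights(light_dirs, max_angle_deg=45.0):
    """Indices of images whose light direction lies within max_angle_deg of (0, 0, 1).

    Assumes light_dirs is (N, 3) with the camera viewing axis along +z.
    """
    dirs = light_dirs / np.linalg.norm(light_dirs, axis=1, keepdims=True)
    cos_angle = dirs[:, 2]  # dot product with (0, 0, 1)
    return np.flatnonzero(cos_angle >= np.cos(np.deg2rad(max_angle_deg)))
```

With the returned indices `idx`, the reconstruction can be rerun on `images[..., idx]` and `light_dirs[idx]` and compared against the full-set result. Keep at least three non-coplanar light directions so that the least-squares problem stays well posed.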

Submission Instructions

You must upload the following files on Canvas:

- Your code in two separate files for part 1 and part 2. The filenames should be lastname_firstname_a3_p1.py and lastname_firstname_a3_p2.py. We prefer that you upload .py Python files, but if you use a Python notebook, make sure you upload both the original .ipynb file and an exported PDF of the notebook.

- A report in a single PDF file with all your results and discussion for both parts following this template. The filename should be lastname_firstname_a3.pdf.

- All your output images and visualizations in a single zip file. The filename should be lastname_firstname_a3.zip. Note that this zip file is for backup documentation only, in case we cannot see the images in your PDF report clearly enough. You will not receive credit for any output images that are part of the zip file but are not shown (in some form) in the report PDF.

Please refer to course policies on academic honesty, collaboration, late days, etc.