Transfer learnable features for ASL:

We have recently become interested in transfer learning, a body of
procedures that allow information obtained while learning one task to be
transferred to another, related task. Transfer learning is important for
activity recognition and understanding, because we do not expect to have
examples of particular activities at particular aspects to hand. We have
used American Sign Language (ASL) recognition as an example domain. We
build word models for ASL that transfer between different signers and
different aspects. This is advantageous because one could use large
amounts of labelled avatar data in combination with a smaller amount of
labelled human data to spot a large number of words in human data.
Transfer learning is possible because we represent blocks of video with
novel intermediate discriminative features based on splits of the data.
By constructing the same splits in avatar and human data and clustering
appropriately, our features are both discriminative and semantically
similar: across signers, similar features imply similar words. We
demonstrate transfer learning in two scenarios: from an avatar to a
frontally viewed human signer, and from an avatar to a human signer in a
3/4 view. This method has been written up for publication. This work is
joint with Ali Farhadi and Ryan White, and appeared as Ali Farhadi, David
Forsyth, Ryan White, "Transfer Learning in Sign Language," IEEE
Conference on Computer Vision and Pattern Recognition, 2007.

Finding: Our method is remarkably effective. We train the method with two
types of data. First, we use examples of some words (shared words) in
frontal view of an avatar, in frontal view of a real signer and in 3/4
view of a real signer. These establish comparisons between features. Then
we train models of new words (target words) using only examples in
frontal view of an avatar. We use these models to spot words in (a) a
frontal view of a real signer and (b) a 3/4 view of a real signer. Our
method has seen no example of these words in any view of a real signer,
but is still accurate at spotting and classifying these words.
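The training protocol described above can be sketched as a simple data filter. This is only an illustration of which examples are allowed into training, not the feature construction itself; the `Example` type, the view names, and the function `build_training_set` are hypothetical names introduced here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Example:
    word: str
    view: str  # assumed labels: "avatar_frontal", "human_frontal", "human_3/4"


def build_training_set(examples, shared_words, target_words):
    """Keep shared words in every view, target words in the avatar view only."""
    kept = []
    for ex in examples:
        if ex.word in shared_words:
            # Shared words are seen in all views; they relate features across views.
            kept.append(ex)
        elif ex.word in target_words and ex.view == "avatar_frontal":
            # Target words are never seen on a real signer during training.
            kept.append(ex)
    return kept


if __name__ == "__main__":
    views = ["avatar_frontal", "human_frontal", "human_3/4"]
    data = [Example(w, v) for w in ("book", "cat") for v in views]
    train = build_training_set(data, shared_words={"book"}, target_words={"cat"})
    for ex in train:
        print(ex.word, ex.view)
```

In this sketch the human examples of target words are held out entirely, so they can serve as test data for word spotting.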

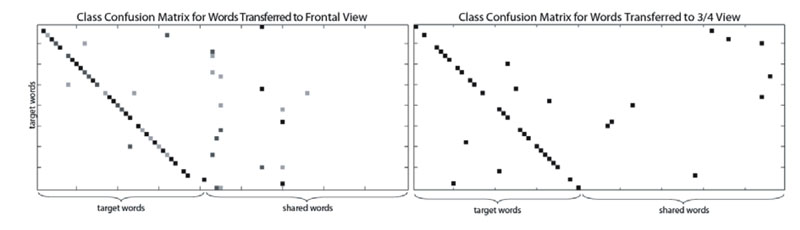

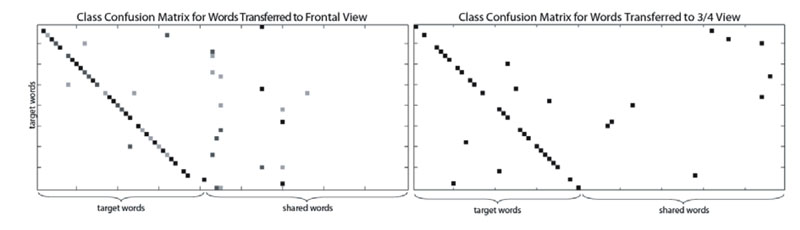

This confusion matrix establishes that transfer learning is accurate,
especially considering the difficulty of the task (elements on the
diagonal indicate correct classification, while others indicate errors).
The word model (p(c|w)) is trained on the avatar and tested on frontal
human data (left) and 3/4 view human data (right).
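For readers unfamiliar with confusion matrices, a minimal sketch of how one is tallied follows. The word labels and predictions are made up for illustration and are not results from the experiments; the convention that rows are true words and columns are predicted words is an assumption of this sketch.

```python
def confusion_matrix(true_words, predicted_words, vocabulary):
    """Count predictions: rows are true words, columns are predicted words."""
    index = {word: i for i, word in enumerate(vocabulary)}
    counts = [[0] * len(vocabulary) for _ in vocabulary]
    for true, pred in zip(true_words, predicted_words):
        counts[index[true]][index[pred]] += 1
    return counts


def accuracy(counts):
    """Fraction of all examples that fall on the diagonal."""
    total = sum(sum(row) for row in counts)
    correct = sum(counts[i][i] for i in range(len(counts)))
    return correct / total if total else 0.0


if __name__ == "__main__":
    vocabulary = ["book", "cat", "dog"]   # hypothetical target words
    true_words = ["book", "cat", "dog", "cat"]
    predicted = ["book", "cat", "cat", "cat"]
    matrix = confusion_matrix(true_words, predicted, vocabulary)
    for word, row in zip(vocabulary, matrix):
        print(word, row)
    print("accuracy:", accuracy(matrix))
```

Off-diagonal entries show which words are mistaken for which, for instance the single "dog" example labelled as "cat" in this toy data.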

This task is more challenging than a typical application because
wordspotting is done without language models (such as word frequency
priors, bigrams or trigrams).
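To see why the absence of a language model makes the task harder, consider a sketch in which each candidate word w is scored by its word model p(c|w) alone. The decision rule (picking the highest-scoring word), the function `spot_word`, and all the numbers below are assumptions made for illustration; the source does not specify them.

```python
import math


def spot_word(log_likelihoods, log_prior=None):
    """Pick the best word from log p(c|w), optionally adding a log word prior."""
    best_word, best_score = None, -math.inf
    for word, log_lik in log_likelihoods.items():
        score = log_lik
        if log_prior is not None:
            # A word frequency prior would shift the score of common words up.
            score += log_prior[word]
        if score > best_score:
            best_word, best_score = word, score
    return best_word


if __name__ == "__main__":
    # Hypothetical scores for one block of video.
    log_lik = {"book": math.log(0.30), "cat": math.log(0.35)}
    prior = {"book": math.log(0.9), "cat": math.log(0.1)}
    print("without prior:", spot_word(log_lik))
    print("with prior:   ", spot_word(log_lik, prior))
```

Without a prior, the decision rests entirely on how well the visual word model separates the candidates, so any ambiguity in the features goes uncorrected.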